Gone are the days when banks used to store customer information (such as names, photographs, and specimen signatures) on individual postcard-like datasheets. That was an era when thick registers were used in government offices such as post offices and property tax collection centers to store customers’ details or to maintain employees’ daily attendance records.

If an employee had to update a registered customer’s details, the task could take up the whole day. Hours were wasted searching for that particular customer’s record and then creating a new one to replace the old one.

The customers, too, had to wait for hours for such minor tasks to be completed. Besides the tedium of searching through piles of ledgers, such paper files could be lost at any time to disasters like floods or fire, and the paper on which the data was recorded degraded over time.

The story is different in modern times. Almost every task, even a trivial one, is being automated. Automation and speed require data to be processed at a much faster rate. That’s where the term “Big Data” comes in.


5 Best Big Data Tools

Big Data refers to any data that is large in volume, changes quickly, and comes in a wide variety of forms. The tools used for big data management range from DBMSs to full-fledged software suites with integrated data mining and warehousing tools.

Many vendors provide a wealth of great tools, so many that it becomes confusing to choose one over another. I went through several sites on the question of selecting the “best ones” and also asked some experts in the domain. The result is this list of tools, with a short description of each.

1. Apache Hadoop

Apache Hadoop is the most prominent and widely used tool in the big data industry, thanks to its enormous capacity for large-scale data processing. It is a 100% open-source framework that runs on commodity hardware in an existing data center, and it can also run on cloud infrastructure. Hadoop consists of four parts:

- Hadoop Distributed File System: Commonly known as HDFS, it is a distributed file system that provides high-throughput access to application data.

- MapReduce: A programming model for processing big data.

- YARN: A framework for job scheduling and cluster resource management in a Hadoop infrastructure.

- Hadoop Common: The common libraries and utilities that support the other Hadoop modules.
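To make the MapReduce model more concrete, here is a minimal in-memory sketch of the classic word-count example in plain Python. It does not use Hadoop’s API; the functions `map_phase`, `shuffle`, and `reduce_phase` are hypothetical names that only mimic the map, shuffle, and reduce stages which Hadoop would distribute across a cluster.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group intermediate values by key before they reach the reducers."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    """Run map, shuffle, and reduce in sequence on a single machine."""
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["big data tools", "Big data is big"]
    print(word_count(docs))  # {'big': 3, 'data': 2, 'is': 1, 'tools': 1}
```

In a real Hadoop job, the map and reduce functions run in parallel on many nodes, and the framework performs the shuffle over the network.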

2. Apache Storm

Apache Storm is a distributed real-time computation framework for reliably processing unbounded data streams. It can be used with any programming language. The unique features of Apache Storm are:

- Massive scalability

- Fault-tolerance

- A “fail fast, auto restart” approach

- Guaranteed processing of every tuple

- Written in Clojure and Java

- Runs on the JVM

- Supports directed acyclic graph (DAG) topologies

- Supports multiple languages

- Supports data formats like JSON

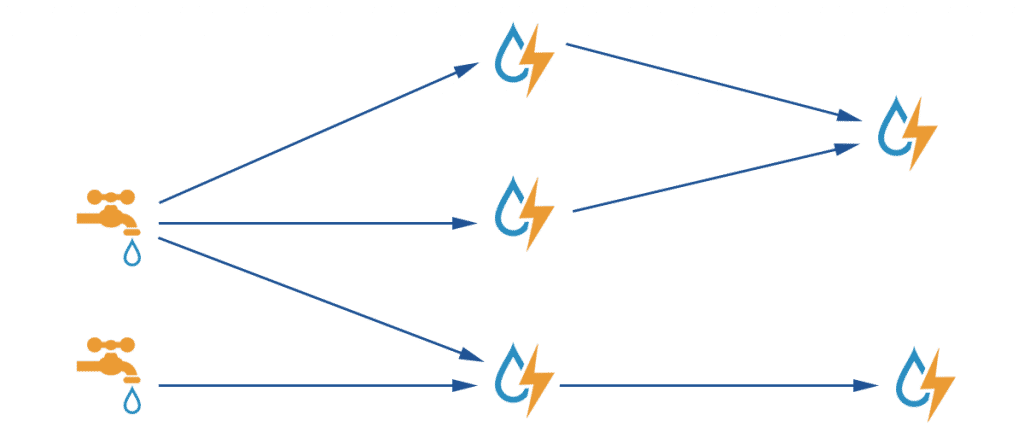

A Storm topology can be considered similar to a MapReduce job. However, Storm performs real-time stream processing instead of batch processing, and while a MapReduce job eventually finishes, a topology keeps running until it is killed. Based on the topology configuration, the Storm scheduler distributes the workload across nodes. Storm can also interoperate with Hadoop’s HDFS through adapters if needed, which is another point that makes it useful as an open-source big data tool.
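The spout-and-bolt idea behind a topology can be sketched in plain Python. This is not Storm’s API: `sentence_spout`, `split_bolt`, and `count_bolt` are hypothetical names, and Python generators stand in for the stream, with each stage consuming tuples from the one before it. Unlike a batch job, the counts here are updated and emitted as each tuple arrives.

```python
from collections import Counter
from typing import Iterable, Iterator


def sentence_spout(source: Iterable[str]) -> Iterator[str]:
    """Spout: emit one tuple (a sentence) at a time from a possibly unbounded source."""
    for sentence in source:
        yield sentence


def split_bolt(sentences: Iterable[str]) -> Iterator[str]:
    """Bolt: split each incoming sentence into words and emit them downstream."""
    for sentence in sentences:
        for word in sentence.lower().split():
            yield word


def count_bolt(words: Iterable[str]) -> Iterator[tuple[str, int]]:
    """Bolt: keep a running count and emit the updated count for every word seen."""
    counts: Counter = Counter()
    for word in words:
        counts[word] += 1
        yield word, counts[word]


if __name__ == "__main__":
    stream = ["storm processes streams", "storm never stops"]
    # Wire the stages into a simple linear DAG: spout -> split -> count.
    for word, running_total in count_bolt(split_bolt(sentence_spout(stream))):
        print(word, running_total)
```

Because each stage pulls tuples lazily, the same pipeline would work unchanged on an endless source; in Storm, each spout and bolt would additionally run as many parallel tasks spread over the cluster.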

3. Cassandra

Apache Cassandra is a distributed database for managing large amounts of data across many servers. It is one of the best big data tools for processing structured data sets. It provides a highly available service with no single point of failure. Additionally, it combines certain capabilities that few other relational or NoSQL databases offer together. These capabilities are:

- Continuous availability as a data source

- Linearly scalable performance

- Simple operations

- Easy distribution of data across data centers

- Cloud availability points

- Scalability

- Performance

Apache Cassandra does not follow a master-slave architecture; all nodes play the same role. It can handle numerous concurrent users across data centers, and a new node can be added to an existing cluster while the cluster stays up.
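Part of what makes adding a node cheap in Cassandra is that data is placed on a token ring using consistent hashing. The sketch below is a simplified illustration, not Cassandra’s implementation: it uses MD5 from Python’s `hashlib` instead of Cassandra’s default Murmur3 partitioner, gives each node a single token (no virtual nodes), and ignores replication. The `TokenRing` class is hypothetical. It shows that when a node joins, only the keys in the token range it takes over change owners.

```python
import bisect
import hashlib


def token(value: str) -> int:
    """Map a string to a position on the ring (MD5 here, as a stand-in for Murmur3)."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class TokenRing:
    """Simplified consistent-hashing ring: one token per node, no replicas."""

    def __init__(self, nodes: list[str]) -> None:
        self._ring: list[tuple[int, str]] = sorted((token(n), n) for n in nodes)

    def add_node(self, node: str) -> None:
        """Insert a new node at its token position, keeping the ring sorted."""
        bisect.insort(self._ring, (token(node), node))

    def owner(self, key: str) -> str:
        """A key belongs to the first node whose token is >= the key's token, wrapping around."""
        tokens = [t for t, _ in self._ring]
        index = bisect.bisect_left(tokens, token(key)) % len(self._ring)
        return self._ring[index][1]


if __name__ == "__main__":
    keys = [f"user:{i}" for i in range(1000)]
    ring = TokenRing(["node-a", "node-b", "node-c"])
    before = {k: ring.owner(k) for k in keys}

    ring.add_node("node-d")  # a new node joins the ring
    after = {k: ring.owner(k) for k in keys}

    moved = [k for k in keys if before[k] != after[k]]
    # Every key that changed owner now belongs to the new node; all others stay put.
    print(f"{len(moved)} of {len(keys)} keys moved;",
          "all to node-d:", all(after[k] == "node-d" for k in moved))
```

Since no other node’s assignments change, the rest of the cluster does not need to reshuffle its data when the new node arrives.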

4. KNIME

KNIME, short for Konstanz Information Miner, is an open-source data analytics, integration, and reporting platform. It integrates various components for data mining and machine learning through its modular data pipelining concept.

A graphical user interface allows the assembly of nodes for data pre-processing (extraction, transformation, and loading), data modeling, visualization, and data analysis. It has been widely used in pharmaceutical research since 2006, and it is now also used in areas like customer data analysis in CRM, financial data analysis, and business intelligence.

Features:

- KNIME is written in Java and based on Eclipse, and it uses Eclipse’s extension mechanism to add plugins that provide additional functionality.

- The core version of KNIME includes modules for data integration, data transformation as well as the commonly used methods for data visualization and analysis.

- It allows users to create data flows and selectively execute some or all of them.

- It allows users to inspect the models, results, and interactive views of the flow.

- KNIME workflows can also be used as data sets to create report templates, which can be exported to document formats such as DOC and PPT.

- KNIME’s core architecture allows the processing of large data volumes, limited only by the available hard disk space.

- Additional plugins allow the integration of methods for image mining, text mining, and time series analysis.
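KNIME itself is driven through its graphical interface, so the following is only a conceptual sketch of node-based pipelining in plain Python, not KNIME code. Each hypothetical `Node` wraps one step and knows its upstream inputs; executing a chosen node runs only that node and the nodes it depends on, caching results, which mirrors the idea of selectively executing part of a workflow.

```python
from typing import Any, Callable


class Node:
    """A workflow step: a function plus the upstream nodes that feed it."""

    def __init__(self, name: str, func: Callable[..., Any], *inputs: "Node") -> None:
        self.name = name
        self.func = func
        self.inputs = inputs
        self.result: Any = None
        self.executed = False

    def execute(self, log: list) -> Any:
        """Run upstream nodes first (each at most once), then this node; return its output."""
        if not self.executed:
            args = [node.execute(log) for node in self.inputs]
            self.result = self.func(*args)
            self.executed = True
            log.append(self.name)
        return self.result


if __name__ == "__main__":
    # Hypothetical workflow: read -> clean -> {summarize, export}
    read = Node("read", lambda: [" 3", "1 ", "", "2"])
    clean = Node("clean", lambda rows: [int(r) for r in rows if r.strip()], read)
    summarize = Node("summarize", lambda nums: sum(nums) / len(nums), clean)
    export = Node("export", lambda nums: ",".join(map(str, nums)), clean)

    log: list = []
    print(summarize.execute(log))  # 2.0
    print(log)  # ['read', 'clean', 'summarize'] (export was never run)
```

Running `export` afterwards would reuse the cached output of `read` and `clean` and execute only the `export` step itself.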

5. R Programming

R is one of the most widely used open-source tools in the big data industry for statistical analysis of data. Its biggest advantage is that, although it is used for statistical analysis, you don’t have to be a statistics expert to use it. R has its own public package repository, CRAN (Comprehensive R Archive Network), which hosts more than 9,000 packages and algorithms for statistical analysis of data.

R can run on Windows and Linux servers as well as inside SQL Server. It also supports Hadoop and Spark. With R, one can work on discrete data and try out new analytical algorithms. Because R is a portable language, a model built and tested on a local data source can easily be deployed to other servers or even run against a Hadoop data lake.

That’s all. If you think I’ve missed an important tool that should be on the list, let me know in the comment section below.

![Top 5 Big Data Tools in 2023 [Free and Open-Source] big data](https://www.technotification.com/wp-content/uploads/2018/06/big-data-tools-open-source-768x424.jpg)