TensorFlow is an open source platform for machine learning. It provides a complete, extensible ecosystem of libraries, tools and community support to build and deploy machine learning powered applications.

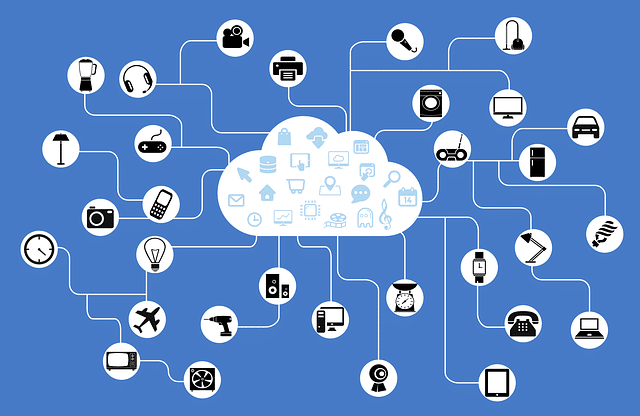

Google has launched TensorFlow Lite 1.0 for developers building artificial intelligence models for mobile and IoT devices. The release brings useful improvements such as selective registration, image classification, and quantization both during and after training, which produce faster and smaller models.

As with most AI models, the TensorFlow Lite workflow begins with training a model in TensorFlow. The trained model is then converted into the TensorFlow Lite format for mobile devices.

TensorFlow Lite was first announced at Google I/O 2017 and released as a developer preview in 2018. TensorFlow engineering director Rajat Monga said:

“We are going to fully support it. We’re not going to break things and make sure we guarantee its compatibility. I think a lot of people who deploy this on phones want those guarantees.”

The main goal of TensorFlow Lite is to make AI models faster and smaller so they can be deployed on edge devices. Its optimization work includes model acceleration, a Keras-based connection pruning kit, and further quantization improvements.
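The following plain-Python sketch shows why quantization makes models smaller. It is a generic illustration of 8-bit affine quantization and not TensorFlow Lite's actual implementation. The function names `quantize_uint8` and `dequantize` are hypothetical, and TensorFlow Lite's own schemes (for example, per-channel weight quantization) differ in detail. Each 4-byte float32 weight is mapped to a single byte using a scale and a zero point. This cuts storage by roughly 4x at the cost of a small rounding error.

```python
import struct


def quantize_uint8(values):
    """Hypothetical helper: map floats to 0..255 with an affine (scale, zero-point) scheme."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # One step of the 8-bit grid, in float units (fall back to 1.0 for an all-zero input).
    scale = (hi - lo) / 255 if hi > lo else 1.0
    # Integer that represents the float value 0.0.
    zero_point = round(-lo / scale)
    # Round each value onto the grid and clamp to the unsigned 8-bit range.
    q = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Hypothetical helper: recover approximate floats from the 8-bit codes."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_uint8(weights)
restored = dequantize(q, scale, zp)

# Worst-case absolute error introduced by the round trip.
max_err = max(abs(a - b) for a, b in zip(weights, restored))

# Storage comparison: little-endian float32 versus one byte per weight.
float_bytes = struct.pack(f"<{len(weights)}f", *weights)
quant_bytes = bytes(q)

print("codes:", q, "scale:", scale, "zero point:", zp)
print("max error:", max_err)
print("float32 bytes:", len(float_bytes), "uint8 bytes:", len(quant_bytes))
```

The zero point is chosen so that 0.0 maps to an exact integer, which keeps zero-valued weights lossless. For values inside the observed range, the round-trip error stays at about half of `scale`.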

The Lite team has also shared its roadmap, which includes control flow support, several CPU performance optimizations, expanded GPU delegate operations, and finalizing the API to make it generally available.

According to TensorFlow Lite engineer Raziel Alvarez, TensorFlow Lite is deployed on more than two billion devices today. He added that as adoption of TensorFlow Lite grows, it will make TensorFlow Mobile obsolete. Some users may still use TensorFlow Mobile for training, but not as a deployment solution.

Engineers are exploring several ways to speed up AI models and optimize them for mobile and edge devices, including:

- Mobile GPU acceleration through delegates, which can make model inference up to 7 times faster.

- Edge TPU delegates, which can run models up to 64 times faster than a floating-point CPU.

TensorFlow Lite can also run on the Raspberry Pi and on the new Coral Dev Board, which launched a few days ago. Google has also released the TensorFlow 2.0 alpha, TensorFlow.js 1.0, and version 0.2 of Swift for TensorFlow.

Google apps and services such as Gboard, Google Photos, AutoML, and Nest also use TensorFlow Lite. It also powers on-device processing when you ask Google Assistant something while offline. Overall, the TensorFlow team has done a lot of backend work to improve the platform's usability and practicality.