The modern business approach is data-driven. In this fast-moving world, businesses generate large amounts of data every second. To keep up, they must be able to process and analyze that data in real time.

Businesses now depend heavily on real-time data processing and streaming to gain insight and make sound decisions. Real-time data will become increasingly vital for anyone who needs to act immediately on insights from data. Real-time processing lets us handle a data stream as it is generated.

This happens without the delays of batching, where data is collected and processed in groups at regular intervals.
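To make the difference concrete, here is a minimal Python sketch that contrasts the two styles. It does not use Kafka at all. The function names and the sample events are hypothetical and exist only for illustration.

```python
from typing import Iterable, Iterator, List


def process_in_batches(events: Iterable[int], batch_size: int) -> List[int]:
    """Batch style: a result appears only once a whole batch has accumulated."""
    results: List[int] = []
    batch: List[int] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            results.append(sum(batch))
            batch = []
    if batch:  # flush a final, partially filled batch
        results.append(sum(batch))
    return results


def process_as_stream(events: Iterable[int]) -> Iterator[int]:
    """Streaming style: emit an updated running total after every event."""
    total = 0
    for event in events:
        total += event
        yield total


events = [5, 3, 7, 1]  # hypothetical event values
batch_totals = process_in_batches(events, batch_size=2)
stream_totals = list(process_as_stream(events))

print(batch_totals)   # [8, 8]
print(stream_totals)  # [5, 8, 15, 16]
```

The batch version produces nothing until two events have arrived, while the streaming version has a fresh result after each one.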

Typical real-time data applications include social media feeds, financial transactions, real-time analytics, and Internet of Things systems.

Because data drives everything, moving it between regions is complex, and handling it in real time is harder still. To address this, many teams turn to Kafka, a popular open-source distributed data streaming platform for real-time data processing.

Kafka is commonly used to build real-time applications that manage and process streams of data. It works as a messaging platform that facilitates communication between different parts of an application or between different applications.

Beyond real-time applications, Kafka is also used for log aggregation and processing, stream processing and analytics, event-driven architectures, and message queue systems. In this post, let’s explore real-time Kafka examples to understand when to use Kafka for real-time data processing and streaming.

What is Kafka best known for?

Why would we need a message broker for data-driven applications? Kafka is one such message broker. It was initially created by LinkedIn engineers to process and manage the large streams of data generated within LinkedIn. This came after existing message brokers, such as RabbitMQ, failed to scale while maintaining real-time data processing.

Kafka’s potential reached beyond LinkedIn. In 2011 it was released under the Apache Software Foundation, and it later became one of the most popular data streaming platforms. According to a Stack Overflow survey, Kafka is among the highest-paying technologies in big data and data streaming.

Kafka has unique features compared to other messaging services. It:

- Handles high-volume, high-throughput, low-latency data streaming at scale.

- Scales horizontally across multiple machines.

- Offers durable data storage alongside real-time data processing.

- Stores data so that multiple services can read the same messages, which contributes to its fault tolerance and resilience.

- Tracks how much of the data stream each consumer has read and records where it left off, so it can resume later.
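The last two points (stored messages that several services can read, and per-consumer positions) can be sketched with a toy in-memory log. This is only an illustration of the idea, not Kafka’s API. `MiniLog`, `append`, `poll`, and the consumer names are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class MiniLog:
    """Toy append-only log with per-consumer offsets (illustration only, not Kafka)."""

    def __init__(self) -> None:
        self._messages: List[str] = []
        # Each consumer name maps to the index of the next message it should read.
        self._offsets: Dict[str, int] = defaultdict(int)

    def append(self, message: str) -> int:
        """Store a message and return its offset in the log."""
        self._messages.append(message)
        return len(self._messages) - 1

    def poll(self, consumer: str, max_messages: int = 10) -> List[str]:
        """Return unread messages for this consumer and advance its offset."""
        start = self._offsets[consumer]
        batch = self._messages[start:start + max_messages]
        self._offsets[consumer] = start + len(batch)
        return batch


log = MiniLog()
for message in ["signup", "login", "purchase"]:
    log.append(message)

analytics_first = log.poll("analytics", max_messages=2)  # reads two, then stops
billing_all = log.poll("billing")                        # independent reader
analytics_rest = log.poll("analytics")                   # resumes where it left off

print(analytics_first)  # ['signup', 'login']
print(billing_all)      # ['signup', 'login', 'purchase']
print(analytics_rest)   # ['purchase']
```

Reading does not remove anything from the log, which is why the hypothetical `billing` consumer still sees every message after `analytics` has already read some of them.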

Kafka provides a highly scalable, fault-tolerant, and durable pipeline for streaming data, which addresses the problem of analyzing and processing data in real time. It is a good fit for managing high-volume data streams with minimal latency, and that makes Kafka well suited to use cases that process streams in real time, such as:

- Real-time analytics, which gives companies up-to-date information about their clients and processes.

- Messaging systems, which let the components of a distributed architecture communicate with each other.

- Log aggregation, which collects logs from multiple systems to monitor and troubleshoot distributed systems.

- Stream processing, which allows data to be processed and analyzed as it is generated.
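As a rough illustration of real-time analytics over a stream, the sketch below counts events per fixed time window as they arrive. It is plain Python rather than a Kafka stream processing API, and the event names, timestamps, and the `count_per_window` helper are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def count_per_window(
    events: Iterable[Tuple[int, str]], window_seconds: int
) -> Dict[int, Counter]:
    """Group (timestamp, name) events into fixed windows and count each name."""
    windows: Dict[int, Counter] = {}
    for timestamp, name in events:
        # Round the timestamp down to the start of its window.
        window_start = timestamp - (timestamp % window_seconds)
        windows.setdefault(window_start, Counter())[name] += 1
    return windows


# Hypothetical events: (seconds since start, event name)
events = [(0, "play"), (12, "pause"), (59, "play"), (61, "play"), (125, "stop")]
counts = count_per_window(events, window_seconds=60)

for window_start in sorted(counts):
    print(window_start, dict(counts[window_start]))
# 0 {'play': 2, 'pause': 1}
# 60 {'play': 1}
# 120 {'stop': 1}
```

Each event updates its window’s counts immediately, so a dashboard reading `counts` would not have to wait for a nightly job.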

Is Kafka really real-time?

The biggest question here is whether Kafka is truly real-time. But what is real-time? A real-time application processes each event with minimal delay. It delivers data as soon as it is available rather than holding it back to wait for more.

Kafka is real-time in this sense. It is designed to process millions of messages per second. It uses a distributed architecture and writes data to disk before it is processed. That raises a fair question: a database also saves data, so does it achieve real-time data processing? Kafka and a traditional database are complementary and make different trade-offs.

Real-time capabilities are subject to factors such as data pipeline complexity, the number of interconnected services, system hardware, and network infrastructure. What matters in the end is how fast the data is processed, so that applications can react to it. If we wanted to achieve real-time data processing with a database architecture alone, the money and infrastructure needed to give it the speed and throughput of Kafka would be prohibitive.

Kafka’s architecture is built from the ground up on asynchronous connections, clusters (multiple brokers), replication (data replicas), persistent storage, and partitions. Together, these ensure reliability and availability, and they let us process large amounts of data reactively while sustaining high-throughput, low-latency streaming at scale.
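To show why partitions help with scale, here is a small sketch of key-based partitioning: records with the same key always land in the same partition, so their relative order is kept while different keys can be spread across machines. The partition count, the record data, and the `choose_partition` helper are assumptions for illustration, and `zlib.crc32` is a stand-in hash rather than the one Kafka’s own clients use.

```python
import zlib
from typing import Dict, List, Tuple

NUM_PARTITIONS = 3  # hypothetical partition count


def choose_partition(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition with a deterministic hash (stand-in for Kafka's)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Each partition is modeled as an ordered list of (key, value) records.
partitions: Dict[int, List[Tuple[str, str]]] = {p: [] for p in range(NUM_PARTITIONS)}

records = [
    ("user-1", "login"),
    ("user-2", "login"),
    ("user-1", "play"),
    ("user-1", "logout"),
]
for key, value in records:
    partitions[choose_partition(key)].append((key, value))

# All of user-1's events sit in one partition, in the order they were produced.
user1_partition = choose_partition("user-1")
user1_events = [v for k, v in partitions[user1_partition] if k == "user-1"]
print(user1_events)  # ['login', 'play', 'logout']
```

In a real cluster, each partition would also be copied to other brokers for fault tolerance. That replication step is left out of this sketch.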

How is Kafka used for real-time data streaming?

Looking ahead, companies will increasingly need real-time data as an essential part of their business, so they can react to insights as they emerge. Kafka gives us reason to believe that managing data at scale is becoming a solvable problem. Real-time data will become more and more essential to those who want to act instantaneously. Let’s explore real-time Kafka examples and the companies that use it to achieve real-time data streaming and processing.

Well-known companies that use Kafka include LinkedIn, Airbnb, Uber, and Twitter, to name a few. Kafka is not limited to these companies or their industries. We can use it wherever we need to handle high volumes of data in real time.

Take Netflix, a movie streaming platform, as an example. It needs a real-time approach to process streaming events. We might ask ourselves whether Netflix uses Kafka. Its use case fits Kafka’s role as a de facto platform for event streaming.

Netflix has an annual content budget of $16 billion. At that level of spending, decisions cannot be made on intuition alone. Netflix uses cutting-edge technologies to process, and make decisions from, massive amounts of data on the behavior of its roughly 203 million subscribers. A company of this magnitude needs a technological backbone that supports data-driven decision-making at massive scale.

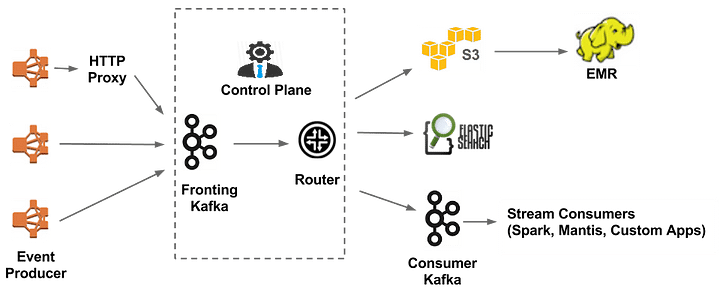

Netflix uses the Keystone Data Pipeline, which processes about 500 billion events per day. Keystone provides a unified event publishing, collection, and routing infrastructure for batch and stream processing. Kafka clusters are the backbone of the Keystone Data Pipeline.

Netflix uses at least 36 Kafka clusters with 4,000+ broker instances that ingest 700 billion messages daily.
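To get a feel for that scale, we can turn the daily figure into a per-second average. This is back-of-the-envelope arithmetic that assumes traffic is spread evenly over the day and across exactly 4,000 brokers, which real traffic will not be. Since the source says "4,000+", the per-broker figure is an upper bound on the average.

```python
SECONDS_PER_DAY = 24 * 60 * 60        # 86,400

messages_per_day = 700_000_000_000    # figure quoted above
broker_instances = 4_000              # lower bound quoted above ("4,000+")

# Average rate, assuming perfectly even traffic (a simplification).
avg_per_second = messages_per_day / SECONDS_PER_DAY
avg_per_broker_per_second = avg_per_second / broker_instances

print(f"{avg_per_second:,.0f} messages/second on average")
# 8,101,852 messages/second on average
print(f"{avg_per_broker_per_second:,.0f} messages/second per broker")
# 2,025 messages/second per broker
```

So the quoted volume works out to roughly 8 million messages every second on average, with peaks presumably higher.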

Kafka has many other use cases. Spotify, for example, built its event delivery on top of Kafka. Spotify has over 200 million users and offers 40 million tracks, plus podcasts, on its streaming platform. More recently, however, its event delivery system migrated from Kafka to Google Cloud Pub/Sub.

Conclusion

Kafka has a broad range of real-time applications. Its ability to handle large volumes of data with high throughput makes it a strong choice for building scalable, real-time data pipelines. It is popular in industries such as:

- Banking, which uses Kafka for real-time streaming data transmission and analysis to handle transaction processing, market data streaming, risk management, and the detection of fraud such as money laundering and illegal payments.

- Gaming, which relies on real-time data processing and event streaming. Game companies use Kafka to process high volumes of data in real time and enable real-time gaming at any scale.

- Healthcare, which relies on massive amounts of data. Providers use Kafka to build AI-driven digital doctors on top of this data architecture, to connect devices and use real-time IoT data for remote monitoring, and to support large-scale patient research and life sciences analytics.

Kafka is great, but be sure to check when to use it, and when not to, for real-time stream processing.