ChatGPT has rapidly gained popularity in the tech industry, impressing users with its capabilities and regular updates. Even so, the current version has limitations. One significant constraint is the ChatGPT token system, which caps how much text you can send and receive in one go and meters how much of your credit each request uses. If you use ChatGPT, especially through the API, it helps to understand what tokens are and what to do when you run out of them.

What’s a ChatGPT Token?

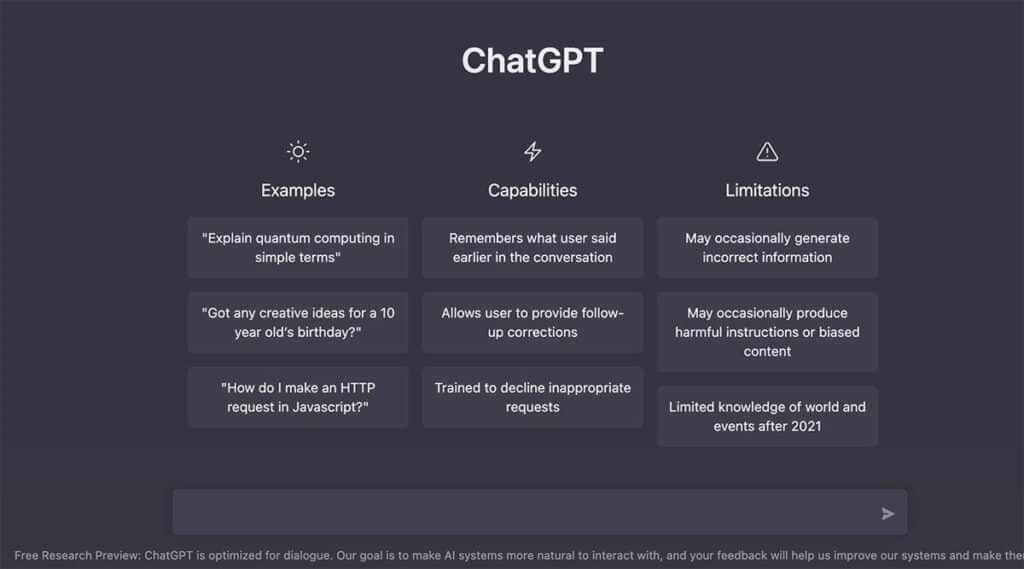

On the surface, all you see is a chat window that takes your questions and returns the answers you seek. Behind it sits a large language model that was trained on a huge amount of text. Whenever you ask a question, ChatGPT first splits your text into tokens. To break it down further, tokens are text fragments: a token can be a whole word, part of a word, or a punctuation mark. Each model family uses its own tokenizer and vocabulary, so the same sentence can produce a different number of tokens depending on the model.
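To make the idea of text fragments concrete, here is a toy sketch. It is not ChatGPT's actual tokenizer, which relies on a much larger learned vocabulary; the tiny vocabulary `TOY_VOCAB` and the function `toy_tokenize` are made up purely for illustration.

```python
# Hypothetical vocabulary of known fragments (illustration only).
TOY_VOCAB = ["token", "iz", "ation", "un", "believ", "able", " ", "s"]


def toy_tokenize(text, vocab=TOY_VOCAB):
    """Split text greedily into the longest matching fragments from vocab.

    Characters not covered by the vocabulary become single-character tokens.
    """
    by_length = sorted(vocab, key=len, reverse=True)
    tokens = []
    i = 0
    while i < len(text):
        for piece in by_length:
            if text.startswith(piece, i):
                tokens.append(piece)
                i += len(piece)
                break
        else:
            # No fragment matched: fall back to one character.
            tokens.append(text[i])
            i += 1
    return tokens


print(toy_tokenize("tokenization"))              # ['token', 'iz', 'ation']
print(len(toy_tokenize("unbelievable tokens")))  # 6
```

Note how one word can cost several tokens, which is why token counts rarely match word counts.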

Computers do not work with text directly, so each token is converted into a numerical representation known as an embedding. An embedding can be likened to a list in a programming language like Python: a sequence of related numbers, such as [1.1, 2.1, 3.1, 4.1, …, n].
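A minimal sketch of that lookup, assuming a five-token vocabulary and four-dimensional vectors. The names `build_embeddings` and `embed` are hypothetical, and the numbers are random here, whereas a real model learns them during training.

```python
import random


def build_embeddings(vocab, dim=4, seed=0):
    """Assign each token an integer ID and a vector of `dim` numbers."""
    rng = random.Random(seed)
    token_to_id = {tok: i for i, tok in enumerate(vocab)}
    # One row per token; random placeholders stand in for learned values.
    matrix = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in vocab]
    return token_to_id, matrix


def embed(tokens, token_to_id, matrix):
    """Look up the embedding vector for each token in order."""
    return [matrix[token_to_id[tok]] for tok in tokens]


token_to_id, matrix = build_embeddings(["Who", "is", "Thomas", "Edison", "?"])
vectors = embed(["Who", "is", "Edison"], token_to_id, matrix)
print(len(vectors), len(vectors[0]))  # 3 4
```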

When ChatGPT receives an input, it uses this embedding representation to predict the most probable next token based on everything it has seen so far. It considers the entire sequence of prior tokens, both your prompt and whatever it has already generated, and estimates which token should come next. Predicting one token at a time reduces text generation to a single step that is repeated over and over.

To improve the accuracy of its predictions, ChatGPT passes the entire sequence of embeddings through transformer layers. These layers are a type of neural network architecture that uses an attention mechanism to weigh how strongly each token relates to the others in a string of text. For instance, when presented with a question like “Who’s Thomas Edison?”, the model can give more weight to “Who” and “Edison” than to the fragments around them.
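The weighing of connections between tokens can be sketched with scaled dot-product attention, the core operation inside transformer layers. The two-dimensional vectors below are made up for illustration; in a real model the query and key vectors are learned projections of the embeddings, and there are many layers and attention heads.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Hypothetical key vectors for the tokens "Who", "is", "Edison".
keys = [[0.9, 0.1], [0.0, 0.2], [1.0, 0.0]]
query = [1.0, 0.0]
weights = attention_weights(query, keys)
print(weights.index(max(weights)))  # 2, i.e. "Edison" gets the most weight
```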

Training transformer layers is a complex and time-consuming process that requires very large amounts of text data. Although ChatGPT predicts only one token at a time, it is auto-regressive: each predicted token is appended to the sequence and fed back into the model to predict the next one. This process occurs sequentially, which is why the output appears one fragment at a time. Generation ends automatically when the model produces a special stop token or reaches the token limit.
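The predict, append, and repeat loop can be sketched as follows. The lookup table `TOY_NEXT` is a hypothetical stand-in for the model and only looks at the last token, whereas the real model conditions on the whole sequence; the names `generate` and `toy_predict_next` are likewise made up.

```python
STOP = "<stop>"

# Hypothetical stand-in for the model: last token -> next token.
TOY_NEXT = {
    "<start>": "Thomas",
    "Thomas": "Edison",
    "Edison": "was",
    "was": "an",
    "an": "inventor",
    "inventor": STOP,
}


def toy_predict_next(tokens):
    """Pretend model: predict the next token from the sequence so far."""
    return TOY_NEXT.get(tokens[-1], STOP)


def generate(prompt_tokens, max_tokens=10):
    """Generate tokens one at a time until a stop token or the limit."""
    tokens = list(prompt_tokens)
    output = []
    for _ in range(max_tokens):
        next_token = toy_predict_next(tokens)
        if next_token == STOP:
            break
        output.append(next_token)
        tokens.append(next_token)  # feed the prediction back in
    return output


print(generate(["<start>"]))                # ['Thomas', 'Edison', 'was', 'an', 'inventor']
print(generate(["<start>"], max_tokens=2))  # ['Thomas', 'Edison']
```

The second call shows the other way generation ends: the loop runs out of its token allowance before a stop token appears.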

Free vs. Paid Tokens

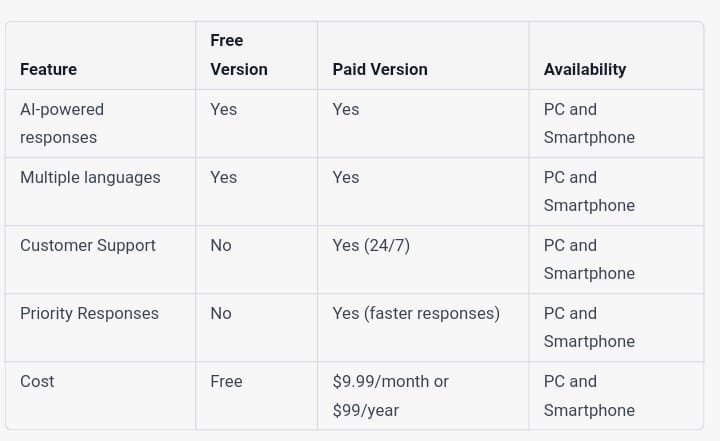

OpenAI lets you try the ChatGPT API for free, with certain limitations. The free trial includes a $5 credit, valid for three months, which you can use to explore and experiment with the API. Once the credit is used up or the trial period expires, you can choose to pay as you go. Opting for pay-as-you-go raises the maximum usage quota to $120, enabling greater usage of the API.
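To get a feel for how far a credit stretches, the arithmetic can be sketched like this. The price of $0.002 per 1,000 tokens is a placeholder assumption, not a quoted rate, and the function names are hypothetical; real pricing varies by model and may differ for prompt and output tokens.

```python
from decimal import Decimal

# Placeholder price per 1,000 tokens (assumption, not an official rate).
HYPOTHETICAL_PRICE_PER_1K = "0.002"


def estimate_cost(prompt_tokens, completion_tokens, price_per_1k_usd):
    """Cost in USD of one call, billing prompt and output tokens alike."""
    total_tokens = prompt_tokens + completion_tokens
    return Decimal(total_tokens) / Decimal(1000) * Decimal(price_per_1k_usd)


def calls_within_credit(credit_usd, cost_per_call_usd):
    """How many whole calls of the given cost fit into the credit."""
    return int(Decimal(credit_usd) // cost_per_call_usd)


cost = estimate_cost(300, 200, HYPOTHETICAL_PRICE_PER_1K)
print(calls_within_credit("5", cost))  # 5000
```

Decimal is used instead of floats so that small per-call amounts do not pick up rounding errors.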

Should You Opt for Paid Subscription?

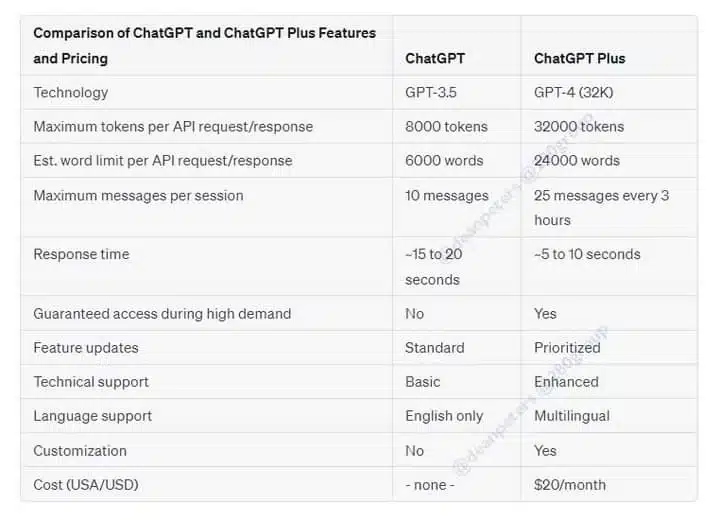

For users seeking an elevated experience, OpenAI offers ChatGPT Plus for a monthly fee of $20. This subscription applies to the ChatGPT web app and is billed separately from API usage. Its features include:

- Continuous Access: Users with the ChatGPT Plus subscription can utilize the service even during periods when the website experiences high traffic or temporary downtime due to a surge in users.

- Enhanced Response Rates: Subscribers to ChatGPT Plus enjoy improved response times, enabling faster and more efficient interactions with the AI model.

- Early Access to New Features: Paid subscribers gain early access to new features and updates as they are released, allowing them to stay at the forefront of the latest advancements in the application.

If you find these features appealing and aligned with your requirements, you can opt for the ChatGPT Plus paid subscription and immediately benefit from these enhanced functionalities.

Is ChatGPT Token Limit a Thing?

To manage output length and keep token and credit usage under control, each model lets you set a maximum number of tokens for each query. This controls the length of the generated output in a single API call and prevents excessive token consumption. By setting the max_tokens parameter, you can specify the ChatGPT token limit you want for each response.
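As a rough sketch, a request body with an output cap might be assembled like this. The field names follow the OpenAI Chat Completions API as commonly documented, but the model name is an assumption and the helper `build_chat_request` is hypothetical; nothing is sent over the network here, so check the current API reference before relying on it.

```python
import json


def build_chat_request(prompt, max_tokens, model="gpt-3.5-turbo"):
    """Build a request body that caps the length of the generated output."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")
    return {
        "model": model,  # assumed model name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # upper bound on generated tokens
    }


payload = build_chat_request("Who's Thomas Edison?", max_tokens=100)
body = json.dumps(payload)  # JSON string ready to send as the request body
print(payload["max_tokens"])  # 100
```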

The default maximum is 2,048 tokens, and it can be raised to 4,096 tokens. That ceiling is the model's context window, so it covers the prompt and the generated output together: the longer the prompt, the fewer tokens remain for the response. This flexibility lets you tailor the output length to your specific requirements, striking a balance between getting the responses you want and keeping token and credit usage in check.
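A small sketch of how that limit plays out in practice, assuming the 4,096-token ceiling is shared between the prompt and the output. The function name `completion_budget` and its defaults are illustrative, taken from the figures above.

```python
def completion_budget(prompt_tokens, requested_max_tokens=2048, context_window=4096):
    """Return the largest output cap that still fits next to the prompt."""
    if prompt_tokens >= context_window:
        raise ValueError("the prompt alone fills the context window")
    remaining = context_window - prompt_tokens
    return min(requested_max_tokens, remaining)


print(completion_budget(1000))  # 2048, the default cap fits
print(completion_budget(3000))  # 1096, only the leftover room is available
```

If the prompt is long, trimming it is often the easiest way to leave room for a fuller answer.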